Wind speed forecasting dataset

Datasets used to train a deep learning model that estimates day-ahead wind speed on an hourly basis. Developed by AMIGO s.r.l. for the ARIA project, part of the I-NERGY 2nd Open Call.
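As a rough illustration of how an hourly series might be framed for day-ahead forecasting, the sketch below slices a list of hourly wind speeds into (history, target) pairs. The function name `make_day_ahead_pairs`, the 24-hour history window, and the 24-hour horizon are assumptions made for this example; they are not taken from the dataset or from the ARIA model.

```python
def make_day_ahead_pairs(hourly_speeds, history_hours=24, horizon_hours=24):
    """Slice an hourly series into (past window, next-day window) pairs.

    Hypothetical helper: window lengths are illustrative assumptions,
    not the configuration used by the dataset's authors.
    """
    pairs = []
    last_start = len(hourly_speeds) - history_hours - horizon_hours
    for start in range(last_start + 1):
        split = start + history_hours
        history = hourly_speeds[start:split]                 # model input
        target = hourly_speeds[split:split + horizon_hours]  # values to predict
        pairs.append((history, target))
    return pairs


# Three days of placeholder hourly values (not real measurements).
series = [float(h) for h in range(72)]
pairs = make_day_ahead_pairs(series)
print(len(pairs), len(pairs[0][0]), len(pairs[0][1]))
```

Each pair could then be fed to whatever sequence model is being trained; a series shorter than the combined window simply yields no pairs.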

Physical AI refers to using AI techniques to solve problems that involve direct interaction with the physical world, e.g., by observing the world through sensors or by modifying the world through actuators. The data is generated from various sources, including physical sensors and "human sources," such as social networks or smartphones. Actuation may range from supporting human decisions to managing automated devices (e.g., traffic lights, gates) and actively directing autonomous cars, drones, etc.

One intrinsic feature of Physical AI is the uncertainty associated with the acquired information, its incompleteness, and the uncertainty about the effects of actions over (physical) systems that share the environment with humans. In other words, Physical AI deals with unreliable, heterogeneous, and high-dimensional sources of data/information and a significant set of actuation variables/actions to learn models, detect events, or classify situations, to name just a few cases. In some cases, a decision-making loop is closed over physical systems with their dynamics, often complicated and challenging to model (e.g., weather dynamics, human crowd behavior).

Existing techniques for data processing and decision-making are not tractable for physical problems of this scale. Thus, one should develop and improve methods that exploit redundancy, combine or infer partial and missing data, transfer knowledge (e.g., through learning), and exploit low-rank characteristics of data to reduce the relevant dimensions of the problem (in terms of observation, state, and action spaces).

A dataset containing real energy consumption data from 3 industrial sites in the EU.

3 Clinical Use Cases with supporting databases are offered to the community

Recognizing existing visual concepts from an image or a video

This asset implements OpenPose, a widely used open-source human pose estimation algorithm, as a containerized gRPC service.

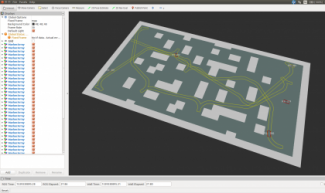

Most of the available solutions for creating digital twins force industry solution architects to resort to ad hoc implementations and models. These solutions lack reusability, scalability, and extensibility, which prevents the introduction of a human digi...

A collection of 250 images taken in a vineyard in Ribera de Duero, annotated using bounding boxes, to train and validate object detection models.

CUSUM RLS filter contains a change detection algorithm for multiple sensors, using the Recursive Least Squares (RLS) and Cumulative Sum (CUSUM) methods [F. Gustafsson. Adaptive Filtering and Change Detection. John Wiley & Sons, Ltd, 2000].
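To make the combination of the two methods concrete, here is a minimal single-sensor sketch, not the asset's own implementation: a scalar RLS filter with a forgetting factor tracks the signal level, and a two-sided CUSUM test on the prediction residuals raises an alarm when the level shifts. The function name `rls_cusum` and the parameter values (`forgetting`, `drift`, `threshold`) are assumptions chosen for illustration.

```python
def rls_cusum(signal, forgetting=0.95, drift=0.5, threshold=5.0):
    """Return the sample indices at which a level change is flagged.

    Illustrative sketch only: scalar RLS with constant regressor (phi = 1)
    plus a two-sided CUSUM test on the residuals. Parameter values are
    assumptions, not those of the CUSUM RLS asset.
    """
    theta = signal[0]      # current level estimate
    p = 1.0                # scalar RLS covariance
    g_pos = g_neg = 0.0    # CUSUM statistics for upward / downward shifts
    alarms = []
    for t, y in enumerate(signal):
        residual = y - theta  # prediction error before the update
        # CUSUM: accumulate residuals beyond the drift allowance.
        g_pos = max(0.0, g_pos + residual - drift)
        g_neg = max(0.0, g_neg - residual - drift)
        if g_pos > threshold or g_neg > threshold:
            alarms.append(t)
            g_pos = g_neg = 0.0
            theta, p = y, 1.0  # restart the filter at the new level
            continue
        # RLS update with forgetting factor and regressor phi = 1.
        gain = p / (forgetting + p)
        theta += gain * residual
        p = (p - gain * p) / forgetting
    return alarms


# Noise-free step from 0 to 3 at sample 50 (synthetic data).
step = [0.0] * 50 + [3.0] * 50
print(rls_cusum(step))
```

The `drift` term trades detection delay against false alarms: a larger value ignores small residuals, while a smaller `threshold` reacts sooner. Extending the sketch to multiple sensors would mean running one such detector per channel or using a vector-valued RLS, which is not shown here.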

An AI resource to track "group-leader" social interactions within videos

AI-RON MAN Wildfire Hazard Risk Assessment scheduled pipeline to continuously update Thermal Anomalies predictions

Witoil Cloud for iMagine performs an oil spill simulation anywhere in the world, with parameters enhanced by AI (Bayesian optimisation). Users only need to register with Copernicus Marine Services and the Climate Data Store from ECMWF, so enviro...