A Simple Guide to Explainable AI

This page is meant to be an accessible entry point to what is meant by “Explainable AI”, and to the resources on Explainable AI that are available in the AI4EU AI on-demand platform. This guide is part of the broader AI4EU scientific vision on “Human-centered AI”, available here.

What is "Explainable AI"?

Artificial Intelligence (AI) systems have sophisticated decision support mechanisms based on an enormous amount of data that are processed and synthesized efficiently into complex models. However, due to either their internal encoding or their level of complexity, many of these models can be described as black boxes that are not accessible to humans.

Explainable AI (XAI) investigates methods for analyzing or complementing AI models to make the internal logic and output of algorithms transparent and interpretable, making these processes humanly understandable and meaningful.

Some might dispute the need for XAI, and it is true that explainability is not needed in every application. This may well hold where the ultimate goal is maximum performance, for example in a cat-vs-dog image classifier. It does not hold, however, when applications affect people's lives and well-being. A system proposing a specific dose of a drug for a patient, or a system suggesting prison time for a defendant, must be open to questioning and able to give reasons for its output. Explainability of such systems provides transparency and allows human experts to be advised by them, rather than to rely on them blindly.

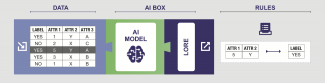

The choice of explanation technique is closely tied to the type of data that the AI model handles. For instance, in machine learning systems trained on tabular data, explanation methods may highlight the most important features, i.e. the attributes of the table that contributed most to a specific decision or prediction. It is also common to present an explanation as a set of rules, in the form of “if-then-else” statements. For datasets containing images, saliency maps and exemplar-based methods may help users understand the decisions of these complex models. In planning, visual tools that let users interact with the system can make it more reliable.
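
To make the idea of "most important features" for a single decision concrete, here is a minimal sketch in Python. It assumes a hypothetical linear scoring model over three invented tabular features (`income`, `debt`, `years_employed`); the weights, the bias and the example row are made up for illustration and do not come from any AI4EU asset. For a linear model, each feature's contribution to one prediction is simply its weight times its value, so the features can be ranked directly.

```python
# Hypothetical linear scoring model over tabular features.
# Feature names, weights and bias are invented for illustration only.
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = -0.2


def score(row):
    """Return the raw score of the toy model for one table row (a dict)."""
    return BIAS + sum(WEIGHTS[name] * row[name] for name in WEIGHTS)


def explain(row):
    """Rank features by the absolute size of their contribution to the score."""
    contributions = {name: WEIGHTS[name] * row[name] for name in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


# One made-up row of a table (values are assumed to be pre-scaled).
applicant = {"income": 1.0, "debt": 2.0, "years_employed": 1.0}

decision = "approve" if score(applicant) > 0 else "reject"
ranking = explain(applicant)

print("decision:", decision)
for name, contribution in ranking:
    print(f"  {name}: {contribution:+.2f}")
```

The same ranking can be read as an informal rule ("if debt is high, then reject"). For non-linear models the contributions cannot be read off this directly, which is where dedicated explanation methods come in.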

Example using Titanic Dataset

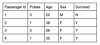

As an example, let us consider the Titanic dataset, which lists the passengers on board on the day of the disaster. A fragment of the data is also shown.

A machine learning algorithm can synthesize a model that predicts whether a passenger Survived (i.e. the class) from the observed values of the other attributes (i.e. the features). A possible decision tree learned from this dataset is shown below: internal nodes represent tests on the value of a specific feature, and leaves represent the class assigned to the rows of the table that reach them. A generic path from the root to a leaf may be considered the explanation of the selected class, expressed in terms of the features traversed along the branch. Thus, this tree representation may be considered a transparent box.
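
The idea that a root-to-leaf path is itself the explanation can be sketched in a few lines of Python. The tree below is written by hand purely for illustration: its splits on `Sex`, `Pclass` and `Age` are assumptions, not the tree learned from the real Titanic data, and the passenger is invented.

```python
def leaf(label):
    """A leaf only stores the class it assigns."""
    return {"label": label}


def node(feature, description, test, if_true, if_false):
    """An internal node tests one feature and points to two subtrees."""
    return {"feature": feature, "description": description, "test": test,
            "if_true": if_true, "if_false": if_false}


# Hand-written toy tree; every split here is a hypothetical example.
TREE = node(
    "Sex", "Sex is female", lambda v: v == "female",
    node("Pclass", "Pclass <= 2", lambda v: v <= 2,
         leaf("survived"), leaf("did not survive")),
    node("Age", "Age < 10", lambda v: v < 10,
         leaf("survived"), leaf("did not survive")),
)


def predict_with_path(tree, passenger):
    """Walk from the root to a leaf, recording every test that was applied."""
    path = []
    current = tree
    while "label" not in current:
        outcome = current["test"](passenger[current["feature"]])
        path.append(f"{current['description']}: {'yes' if outcome else 'no'}")
        current = current["if_true"] if outcome else current["if_false"]
    return current["label"], path


# Invented passenger, used only to exercise the toy tree.
passenger = {"Sex": "male", "Age": 35, "Pclass": 3}
label, path = predict_with_path(TREE, passenger)

print("prediction:", label)
print("because:", " AND ".join(path))
```

The recorded path reads as a conjunction of conditions, which is exactly the kind of explanation a small tree offers for free.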

When the size and complexity of the tree grow, or when the internal encoding of the learned model is not transparent, we speak of black-box models. We encounter and interact with many black boxes when using automated systems. In finance, decision support systems determine the credit score and loan approval of applicants. In online social networks, algorithms determine the content we are exposed to. In web search engines, ranking models decide the order of the results that we choose from.

Researchers have developed many strategies to open a black box, i.e. to make it as accessible as the decision tree for the Titanic dataset above. One strategy consists of deriving a transparent model that mimics the original black box, by querying the opaque box many times to capture the internal mechanics of its decisions. Another widespread approach consists of explaining each single outcome of the black box, again by repeatedly probing it with examples derived from the outcome to be explained.
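
Both strategies can be illustrated with a small, self-contained Python sketch. Everything in it is an assumption made for the example: `black_box` is a stand-in opaque model over two invented features `x1` and `x2`, the transparent surrogate is the simplest possible one (a single threshold rule), and the local probe just counts how often perturbing one feature flips the outcome. Real methods are considerably more refined than this.

```python
import random


def black_box(row):
    """Stand-in opaque model: we pretend we can call it but not read it."""
    return 1 if 0.7 * row["x1"] + 0.1 * row["x2"] > 0.4 else 0


def fit_stump(rows, labels):
    """Global surrogate: the single rule 'feature > t' that best mimics the labels."""
    best = None
    for feature in rows[0]:
        for t in sorted({r[feature] for r in rows}):
            agree = sum((r[feature] > t) == bool(y) for r, y in zip(rows, labels))
            if best is None or agree > best[0]:
                best = (agree, feature, t)
    agree, feature, t = best
    # Fidelity: fraction of probes on which the rule agrees with the black box.
    return feature, t, agree / len(rows)


def local_sensitivity(model, instance, rng, n=200, scale=0.3):
    """Local probe: how often does perturbing one feature flip this outcome?"""
    original = model(instance)
    flips = {}
    for feature in instance:
        changed = 0
        for _ in range(n):
            neighbour = dict(instance)
            neighbour[feature] += rng.uniform(-scale, scale)
            changed += model(neighbour) != original
        flips[feature] = changed / n
    return flips


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Strategy 1: ask the black box many questions, then fit a transparent mimic.
probes = [{"x1": rng.random(), "x2": rng.random()} for _ in range(200)]
answers = [black_box(p) for p in probes]
feature, threshold, fidelity = fit_stump(probes, answers)
print(f"surrogate rule: predict 1 if {feature} > {threshold:.2f} "
      f"(fidelity {fidelity:.2f})")

# Strategy 2: explain one outcome by probing around that single instance.
instance = {"x1": 0.55, "x2": 0.5}
flips = local_sensitivity(black_box, instance, rng)
print("flip rate per feature:", flips)
```

The surrogate gives one global, readable approximation of the black box, together with a fidelity score that says how far to trust it. The local probe says nothing about the model as a whole, but it indicates which feature matters for this particular decision.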

Some asset examples

LioNets

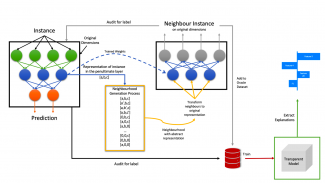

LioNets: Local Interpretations Of Neural Networks through Penultimate Layer Decoding

LORE

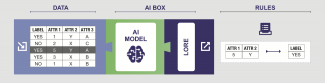

LORE (LOcal Rule-based Explanations) is a model-agnostic explanator for tabular data

Please help us to complete and maintain this document by sending corrections or additions to the document maintainer, Salvo Rinzivillo.

Note: if you want to add a software resource, data set or researcher to this document, you first need to make sure that they are available in the AI4EU platform, e.g., by publishing the software.

This document is published under the Creative Commons License Attribution 4.0 International (CC BY 4.0). It should be cited as:

- Salvo Rinzivillo (editor), “A simple guide to Explainable AI”. Published on the AI4EU platform: http://ai4eu.eu. June 24, 2020.