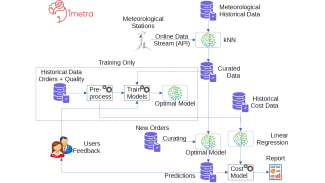

Dimetra.eu: Banana Crown Rot Predictor

An AI consultant to predict Crown Rot (CR) disease in bananas before shipment.

Explainable AI (XAI) investigates methods for analyzing or complementing AI models so that the internal logic and outputs of algorithms become transparent and interpretable, and therefore humanly understandable and meaningful. Some might dispute the need for XAI, and explainability is indeed unnecessary in certain applications, particularly where the sole goal is maximum performance, as in a cat-vs-dog image classifier. This is not the case, however, when an application affects people's lives and well-being. A system proposing a drug dosage for a patient, or suggesting a prison sentence for a defendant, must produce outputs that can be questioned and justified. Explainability makes such systems transparent and lets human experts use them as advisors rather than rely on them blindly.

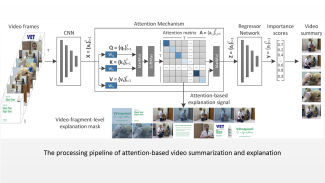

This library lists the outcomes of our research on video summarization and explainable AI-based summarization.

2D computer vision and image analysis are revolutionizing many domains, notably: -Medical/Biological/Dental Image Analysis and Diagnosis, -Autonomous Systems Perception, -Digital Media (video/image) and Social Media Analytics, -Big Visual Data Analyti...

A pipeline that retrieves and processes Sentinel-2 images for a specific region of interest (ROI) in order to display and rescale NDVI values.
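The processing step can be sketched as below, assuming the red (B4) and near-infrared (B8) Sentinel-2 bands have already been downloaded and clipped to the ROI as reflectance arrays; retrieval is not shown. NDVI uses the standard per-pixel formula (NIR - Red) / (NIR + Red). The function names `compute_ndvi` and `rescale_ndvi`, the NaN handling, and the linear mapping from [-1, 1] to 8-bit [0, 255] for display are illustrative assumptions, not the pipeline's actual code.

```python
import numpy as np


def compute_ndvi(nir: np.ndarray, red: np.ndarray) -> np.ndarray:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red).

    Pixels where NIR + Red == 0 are set to NaN to avoid division by zero.
    """
    nir = nir.astype(np.float64)
    red = red.astype(np.float64)
    denom = nir + red
    ndvi = np.full(denom.shape, np.nan)
    valid = denom != 0
    ndvi[valid] = (nir[valid] - red[valid]) / denom[valid]
    return ndvi


def rescale_ndvi(ndvi: np.ndarray) -> np.ndarray:
    """Linearly map NDVI from [-1, 1] to [0, 255] for display.

    NaN pixels are treated as -1 and therefore end up as 0 (black).
    """
    clipped = np.clip(np.nan_to_num(ndvi, nan=-1.0), -1.0, 1.0)
    return np.round((clipped + 1.0) / 2.0 * 255).astype(np.uint8)


# Hypothetical 2x2 reflectance values for B8 (NIR) and B4 (red) over the ROI.
nir = np.array([[0.5, 0.4], [0.3, 0.0]])
red = np.array([[0.1, 0.4], [0.1, 0.0]])

ndvi = compute_ndvi(nir, red)
display = rescale_ndvi(ndvi)
print(ndvi)
print(display)
```

A fixed [-1, 1] mapping keeps colours comparable across dates; stretching to each image's own min/max would increase contrast at the cost of that comparability.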

Our dream of making machines sense and perceive (notably, see) is coming true. Computer vision now enables diverse applications: -Autonomous Systems (cars, drones, vessels) Perception, -Robotics Perception and Control, -Intelligent Human-Machine Intera...

Human-centered computing has many application areas, e.g.: -Human-Computer Interfaces and Human-Robot Interfaces, -Social Media Analytics, -Video Analysis, -Affective Computing, -Biometrics, -Privacy Protection

To boost interpretability with concept vectors, a reverse-engineering approach automates concept identification by analyzing the latent space of deep neural networks using singular value decomposition (SVD). This framework combines factorization, latent space c...
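As a generic illustration of the SVD step only (the framework's full pipeline is not described here), the sketch below takes latent activations from one network layer, mean-centers them, and treats the top right singular vectors as candidate concept directions. It assumes the activations have already been extracted into an `(n_samples, n_features)` array. The function names and the synthetic data are hypothetical, and the resulting directions are only candidates that would still need to be interpreted or labeled by a human.

```python
import numpy as np


def concept_directions(activations: np.ndarray, k: int):
    """Top-k right singular vectors of mean-centered activations.

    Returns (directions, explained, mean):
      directions: (k, n_features) unit vectors in latent space
      explained:  fraction of total variance captured by each direction
      mean:       feature-wise mean used for centering
    """
    mean = activations.mean(axis=0)
    centered = activations - mean
    # Thin SVD: centered = U @ diag(s) @ Vt; rows of Vt are orthonormal directions.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    variance = s ** 2
    return vt[:k], variance[:k] / variance.sum(), mean


def concept_scores(activations: np.ndarray, directions: np.ndarray, mean: np.ndarray):
    """Project (centered) activations onto each candidate concept direction."""
    return (activations - mean) @ directions.T


# Synthetic latent space: one strong hypothetical "concept" axis plus small noise.
rng = np.random.default_rng(0)
true_dir = np.zeros(16)
true_dir[3] = 1.0
acts = rng.normal(size=(500, 1)) * 5.0 * true_dir + 0.1 * rng.normal(size=(500, 16))

directions, explained, mean = concept_directions(acts, k=2)
scores = concept_scores(acts, directions, mean)
print(explained)
```

The right singular vectors of the centered matrix coincide with its principal components, so this step is equivalent to PCA on the latent activations; the per-sample `scores` indicate how strongly each input expresses each candidate concept.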

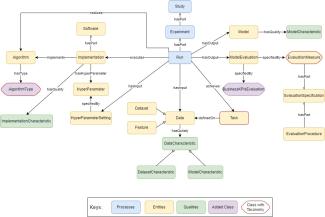

The OpenManufacturing ontology extends the OpenML ontology, which contains classes for representing different aspects of machine learning. OpenManufacturing extends this base ontology by adding two main classes and three taxonomies representing elements relevant to...

This regression model uses historical data measured by machine sensors to predict future usage and detect possible future faults in the machine itself. Its explainability metrics target groups of sensors and are computed with the SHAP library.
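The idea of sensor-group explanations can be sketched without the SHAP dependency for the special case of a linear regression model: assuming independent features, the SHAP value of feature i is w_i * (x_i - E[x_i]), which is the closed form used for linear models. The actual model type and sensors are not specified in the source, so the sensor names, the group mapping, the data, and the helper functions below are all hypothetical. Because SHAP values are additive, summing them within a group yields a group-level contribution, and the group totals plus the base value still reproduce the prediction.

```python
import numpy as np


def fit_linear(X: np.ndarray, y: np.ndarray):
    """Ordinary least squares with an intercept; returns (weights, bias)."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef[:-1], coef[-1]


def linear_shap(X_background: np.ndarray, x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """SHAP values of a linear model under feature independence:
    phi_i = w_i * (x_i - mean of feature i over the background data)."""
    return w * (x - X_background.mean(axis=0))


def group_attributions(phi: np.ndarray, groups: dict) -> dict:
    """Sum per-feature SHAP values within each sensor group."""
    return {name: float(phi[idx].sum()) for name, idx in groups.items()}


# Hypothetical sensor features: [temp_1, temp_2, vibration_1, current_1]
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 4))
y = X @ np.array([2.0, -1.0, 3.0, 0.5]) + 1.0 + 0.01 * rng.normal(size=200)

w, b = fit_linear(X, y)
groups = {"temperature": [0, 1], "vibration": [2], "current": [3]}

x = X[0]
phi = linear_shap(X, x, w)
base_value = float(w @ X.mean(axis=0) + b)   # expected model output
prediction = float(w @ x + b)
by_group = group_attributions(phi, groups)
print(by_group)
```

For non-linear models, the per-feature values would come from a SHAP explainer instead of the closed form, and the same summation by sensor group applies.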