
The New Frontiers of European AI Regulation: How We Are Moving Toward Trustworthiness

Since 2018, the European Commission has been debating the future direction of Artificial Intelligence (AI) in Europe. A distinctive trait of its approach is the combination of economic growth and ethical principles, with the aim of enhancing the benefits of AI for society while limiting the risks associated with these technologies. In this context, the Commission’s new proposal for a regulation on Artificial Intelligence marks a milestone in the development of this strategy. But what does the document contain, and how will this proposal shape our daily lives? In this article, we present a short outline of the document and offer some food for thought.


In recent years, the European Commission has made a strong commitment to fundamental rights and human values in the development and deployment of AI. Accordingly, the proposed European legal framework aims to guarantee the safety and protection of people in Europe through a risk-based approach: the higher the risk an AI system poses to a person’s life and integrity, the stricter the rules it must follow.

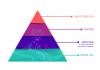

The regulation can be represented as a pyramid made up of four risk categories:

Low-risk AI: This level covers AI systems that pose minimal or no risk, such as video games or spam filters, which can be used without restriction. Although these systems are not subject to any mandatory obligations, the Commission encourages their providers to apply the requirements on a voluntary basis.

Limited-risk AI: For AI systems with limited risk, the proposal introduces transparency obligations. This category includes AI applications that interact with humans (e.g., chatbots or voice assistants), that generate or manipulate image, audio or video content (e.g., deepfakes), and that detect emotions or categorise people based on biometric data. In these cases, people must be informed, for example, that they are interacting with an artificial agent or, in the case of a deepfake, that the content has been generated or manipulated through automated means.

High-risk AI: This level includes AI systems that can have a significant impact on people’s lives (e.g., scoring exams in education, sorting CVs in recruitment, or evaluating the reliability of evidence in law-enforcement investigations). A system can be high-risk either because it is a safety component of a product that is already subject to a conformity assessment under existing law (e.g., medical devices), or because its use case appears in a list, set out in Annex III, that can be updated over time. For this category of AI, the proposal sets out seven requirements:

- Risk management system: The AI provider should establish and maintain a risk management system to identify, analyse and minimise the risks posed by its AI products.

- Data and data governance: Data must be of high quality to avoid biased or discriminatory results. Training, validation and testing datasets must be subject to appropriate data governance and management practices.

- Technical documentation: Detailed documentation describing the AI system must be provided (the required information is specified in Annex IV), allowing the authorities to assess the system’s compliance with the requirements.

- Record-keeping: The AI system should be designed so that events (logs) are recorded automatically, ensuring the traceability of the system’s functioning.

- Transparency and provision of information to users: Providers must give users sufficient information to help them “understand” and properly use AI systems.

- Human oversight: The AI provider needs to ensure an appropriate level of human oversight in both the design and the implementation of AI systems.

- Accuracy, robustness and cybersecurity: The AI system should be designed and developed to achieve an appropriate level of accuracy, robustness and cybersecurity, and to perform consistently in these respects throughout its life cycle.

In concrete terms, to meet these requirements, the provider of a high-risk AI application goes through four steps before the system is placed on the market:

Step 1) The AI system is classified as high-risk.

Step 2) The system undergoes a conformity assessment to ensure that it complies with the AI Regulation.

Step 3) Stand-alone AI systems are registered in an EU database.

Step 4) The provider signs a declaration of conformity, so that the system can bear the CE marking and be placed on the market.

If substantial changes occur during the AI system’s life cycle, the provider must go back to Step 2.
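To make the sequence concrete, including the loop back to Step 2 after a substantial change, the four steps can be sketched as a small progress tracker in Python. This is a toy model under our own assumptions, not a compliance tool: the class `HighRiskSystemTracker` and the `Step` labels are hypothetical names, and the legal procedure is simplified to an ordered checklist in which Step 3 (registration) applies only to stand-alone systems.

```python
from enum import Enum


class Step(Enum):
    """Hypothetical labels for the four steps (illustrative only)."""
    CLASSIFIED_HIGH_RISK = 1      # Step 1: system classified as high-risk
    CONFORMITY_ASSESSED = 2       # Step 2: conformity assessment passed
    REGISTERED_IN_EU_DB = 3       # Step 3: required for stand-alone systems only
    DECLARED_AND_CE_MARKED = 4    # Step 4: declaration signed, CE marking borne


class HighRiskSystemTracker:
    """Toy tracker of a hypothetical system's progress toward the market."""

    def __init__(self, stand_alone: bool) -> None:
        self.stand_alone = stand_alone
        self.completed: list[Step] = []

    def required_steps(self) -> list[Step]:
        # Iterating over an Enum yields members in definition order.
        # Registration in the EU database is skipped for non-stand-alone systems.
        return [s for s in Step
                if self.stand_alone or s is not Step.REGISTERED_IN_EU_DB]

    def complete(self, step: Step) -> None:
        # Steps must be completed strictly in the required order.
        required = self.required_steps()
        if len(self.completed) == len(required):
            raise ValueError("all steps are already completed")
        expected = required[len(self.completed)]
        if step is not expected:
            raise ValueError(f"expected {expected.name}, got {step.name}")
        self.completed.append(step)

    def may_be_placed_on_market(self) -> bool:
        return self.completed == self.required_steps()

    def record_substantial_change(self) -> None:
        # A substantial change sends the provider back to Step 2:
        # only the Step 1 classification is kept.
        self.completed = self.completed[:1]


if __name__ == "__main__":
    tracker = HighRiskSystemTracker(stand_alone=True)
    for step in Step:
        tracker.complete(step)
    print(tracker.may_be_placed_on_market())  # True
    tracker.record_substantial_change()
    print(tracker.may_be_placed_on_market())  # False
```

Modelling the procedure as an ordered list keeps the "go back to Step 2" rule to a single line: everything after the classification is simply discarded and must be redone.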

Unacceptable-risk AI: The last level of the pyramid covers AI applications that can cause physical or psychological harm by influencing people’s behaviour beyond their consciousness or by exploiting the vulnerabilities of specific groups (e.g., children). These systems are prohibited because they go against fundamental rights; examples include social scoring by governments and toys using voice assistance that encourage dangerous behaviour in minors.
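The four levels of the pyramid and their consequences can be summarised as a simple lookup table. The sketch below is a deliberate simplification under our own assumptions: `RiskTier`, `CONSEQUENCES`, `EXAMPLES` and `market_access_allowed` are hypothetical names, the example assignments are taken from the cases mentioned in this article, and real classification depends on the legal text and its annexes, not on a dictionary.

```python
from enum import Enum


class RiskTier(Enum):
    """The four levels of the risk pyramid, as summarised in this article."""
    LOW = "low"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Simplified regulatory consequence attached to each tier (illustrative only).
CONSEQUENCES = {
    RiskTier.LOW: "no mandatory obligations; voluntary application encouraged",
    RiskTier.LIMITED: "transparency obligations (e.g. disclose interaction with AI)",
    RiskTier.HIGH: "seven requirements plus conformity assessment before market entry",
    RiskTier.UNACCEPTABLE: "prohibited",
}

# Example use cases mentioned in the article, mapped to their tier.
EXAMPLES = {
    "spam filter": RiskTier.LOW,
    "chatbot": RiskTier.LIMITED,
    "CV sorting for recruitment": RiskTier.HIGH,
    "social scoring by governments": RiskTier.UNACCEPTABLE,
}


def market_access_allowed(tier: RiskTier, conformity_assessed: bool = False) -> bool:
    """Hypothetical helper: may a system in this tier be placed on the EU market?"""
    if tier is RiskTier.UNACCEPTABLE:
        return False                  # prohibited regardless of any assessment
    if tier is RiskTier.HIGH:
        return conformity_assessed    # only after a successful conformity assessment
    return True                       # low and limited risk: no prior assessment


if __name__ == "__main__":
    for use_case, tier in EXAMPLES.items():
        print(f"{use_case}: {tier.value} -> {CONSEQUENCES[tier]}")
```

The ordering of the checks mirrors the pyramid from the top down: a prohibited system is rejected before any other condition is considered.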

Once an AI system has been placed on the market, compliance with the regulation will be monitored by the national authorities designated by each Member State. In case of persistent non-compliance, the authorities will sanction the AI provider.

As the Observatory, we always welcome open and honest debate to better understand the challenges we face. If you would like to contribute your opinion to the discussion on the regulation, feel free to email us at osai@unive.it.

To learn more:

Proposal for a Regulation on a European approach for Artificial Intelligence

Excellence and trust in Artificial Intelligence

Image by Christian Lue

Figure 1: Pyramid representation of the 4 categories of risk for AI. Original source: European Commission

Figure 2: The 4 steps for an AI application to enter the market. Original source: European Commission